Adaptive Observation for Probabilistic Search of a Randomly Moving Target

Overview

This project aims to optimize controls of autonomous agents searching for randomly moving targets (non-evasive and evasive). Computational frameworks are proposed to iteratively confine control strategies, balancing the information gathering and agents mobility. We present three complementary developments: (i) a continuous-discrete observation framework, (ii) a time-periodic search strategy tailored for non-evasive targets, and (iii) a collaborative search approach for tracking evasive targets. An example of probabilistic search, on finding the lost pet, is shown in Figure 1 below.

Keywords: Probabilistic search theory · Stochastic dynamics · PDE-constrained optimization · Adjoint analysis

Motivation

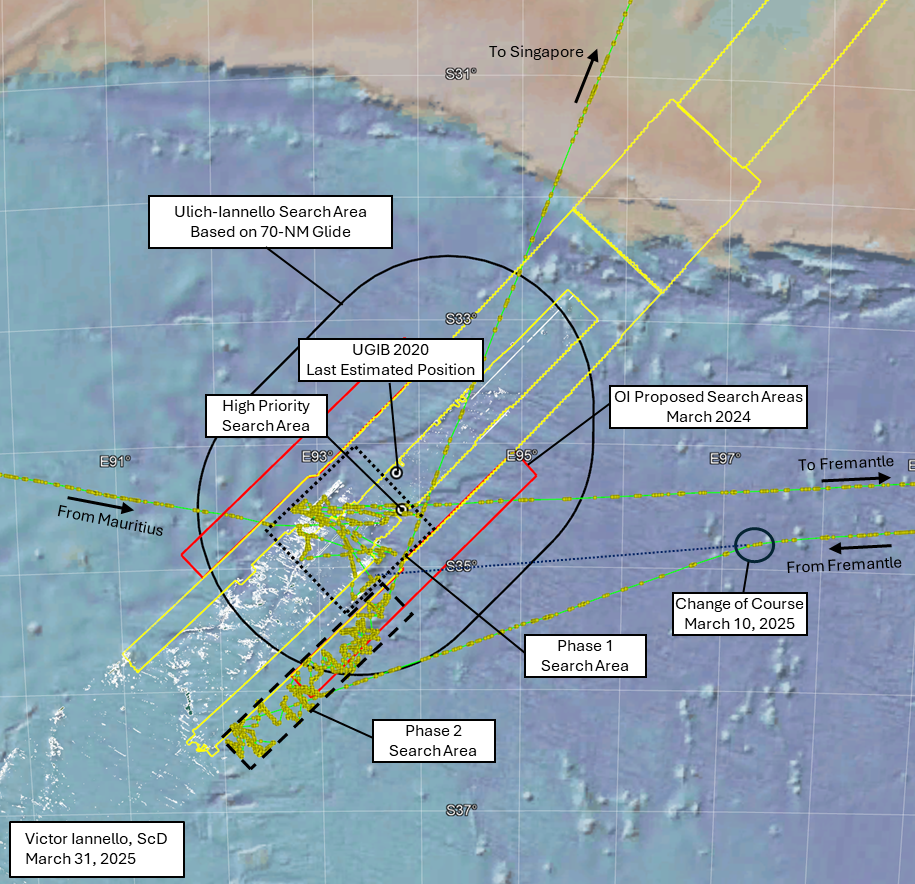

Search problems are common in both military and civilian domains—from rescuing missing persons, pets or wreckage like MH370 (non-evasive randomly moving targets), to tracking fugitives or foraging animals (evasive randomly moving targets). Unfortunately, many traditional methods are limited in adaptability and struggle in uncertain environments. Figure 2a and 2b below showcase the real-world challenges, even just for a stationary target shown in Figure 2a, often resorting to predetermined or exhaustive sweeps.

Key challenges in theory

Previous state-of-the-art work in probabilistic search has several limitations:

- Phelps et al. [2014] considers only uncertainty in the target’s initial location, neglecting uncertainty in its motion;

- Hellman [1970] focuses on optimizing search trajectories, but ignores dynamic feasibility of searchers;

- Ohsumi [1991] introduces dynamic programming for optimal control, but is computationally intensive and impractical for complex models;

In contrast, the computational frameworks developed in this project will not only tackle the aforementioned challenges, but will also propose modeling for non-evasive target, or even evasive.

Methodology and Numerical Results

The crux of this project is adaptive observation, a framework that couples estimation and control. The estimation task involves modeling the moving target’s likely position as a stochastic process, accounting for the uncertainty in both its initial location and dynamics. The control task involves decision-making and coordination of searchers as they scan their surroundings to locate the target. Crucially, these two components are organically coupled: searchers’ observations not only inform the evolving estimate of the target’s location, but also respond to it, forming a “feedback loop” that iteratively drives both estimation and control tasks. This idea is illustrated in Figure 3 below.

In this homepage, we focus on showcasing numerical results. For detailed mathematical background—including the modeling of the target’s motion via stochastic differential equations, the evolution of the target’s probability density function (PDF) using Fokker-Planck equation, and the formulation of the optimal control problem—please click the blue section titles below for more details.

Part 1 Continuous-discrete observation

We first consider a hybrid search framework in which the target’s motion evolves continuously in time, while observations are made at discrete time steps. The core challenge lies in optimizing searcher controls under uncertainty while accounting for the evolving probability density function (PDF) of the target’s position.

Part 2 Optimized periodic orbits

Inspired by the periodic-like trajectories obtained from optimizing hybrid search strategy as shown in Figure 4 above, Zhao and Bewley [2025] investigates a periodic search strategy for randomly moving targets. By optimizing the controls—and thus periodic trajectories—of multiple autonomous agents, the approach efficiently shapes the long-term probability landscape to maximize detection rate under uncertainty.

Part 3 Collaborative search strategy

Under construction

Part 4 Probabilistic search for grid-based Bayesian estimation exploiting sparsity

Theory and code under development…

Conclusion

We presents principled frameworks for optimizing controls of autonomous agents searching for randomly movingtargets. This project concludes:

- The proposed framework can efficiently locate the unseen target even under mobile uncertainty;

- There is enough control authority for optimizing searchers controls (and thus, trajectories), and the proposed adjoint analysis can guide the control synthetics;

- The proposed periodic and collaborative search strategy can efficiently find the evasive target, balancing the spatial coverage with control efficiency.

Numerical results demonstrate fast convergence of the objective and control inputs, as well as successful periodicity enforcement via adjoint-based gradient optimization. This approach is particularly well-suited for long-duration, passive monitoring tasks such as rescue-and-save missions, persistent surveillance, and environmental monitoring.

References

- Chris Phelps, Qi Gong, Johannes O. Royset, Claire Walton, and Isaac Kaminer. Consistent approximation of a nonlinear optimal control problem with uncertain parameters . Automatica, 50(12):2987–2997, 2014.

- Olavi Hellman. On the effect of a search upon the probability distribution of a target whose motion is a diffusion process . The Annals of Mathematical Statistics 41.5 (1970): 1717-1724.

- Akira Ohsumi. Optimal search for a Markovian target . Naval Research Logistics (NRL), 38(4):531–554, 1991.

- Muhan Zhao and Thomas R. Bewley, Probabilistic search for randomly moving targets using optimized time-periodic orbits, under review at Automatica since Feb. 2025.